Hand Gesture Recognition Model using Deep Learning

Abstract

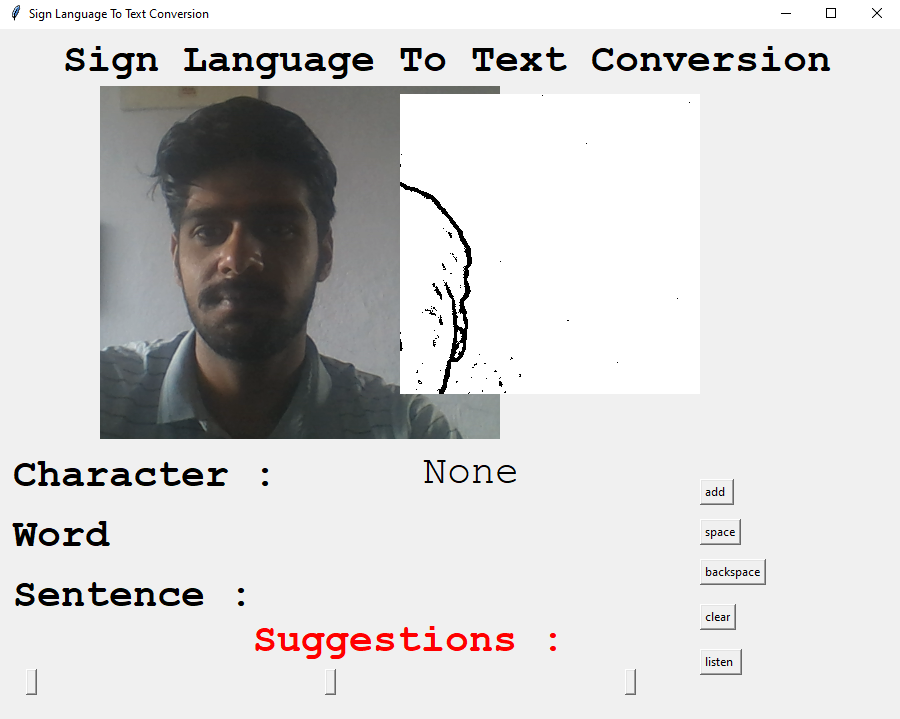

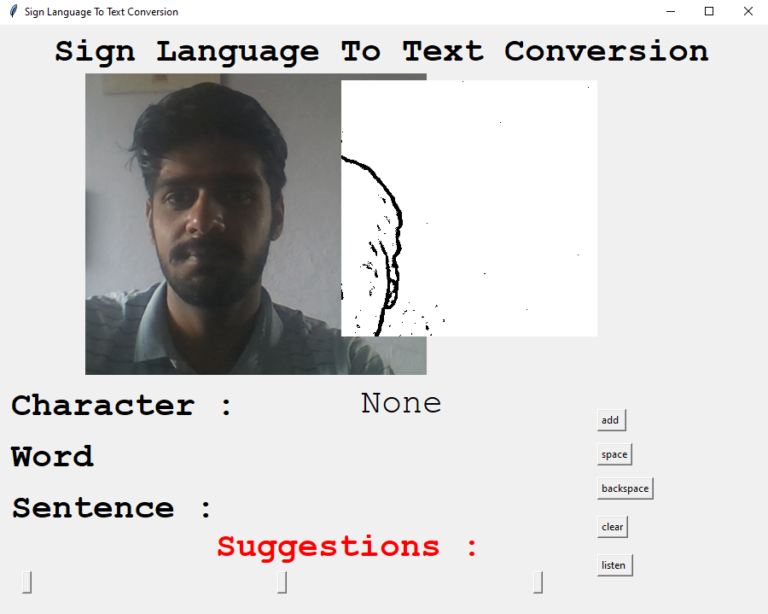

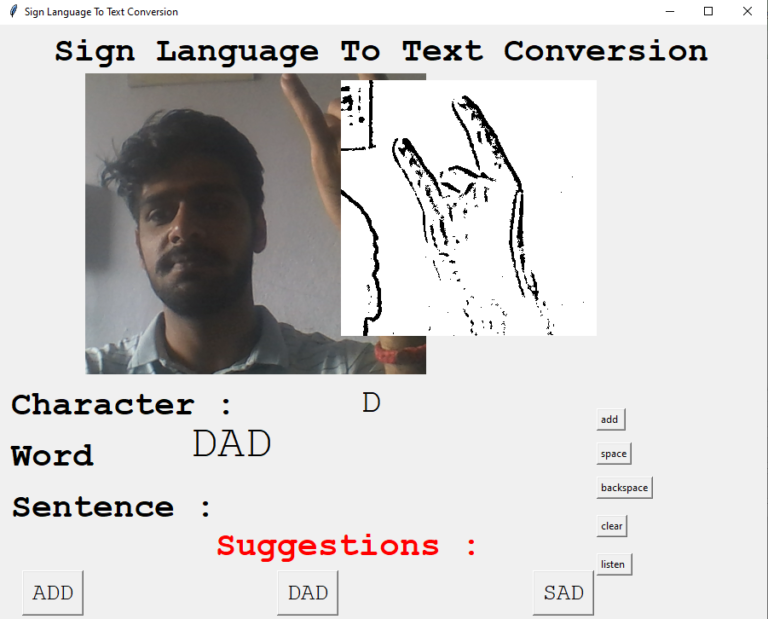

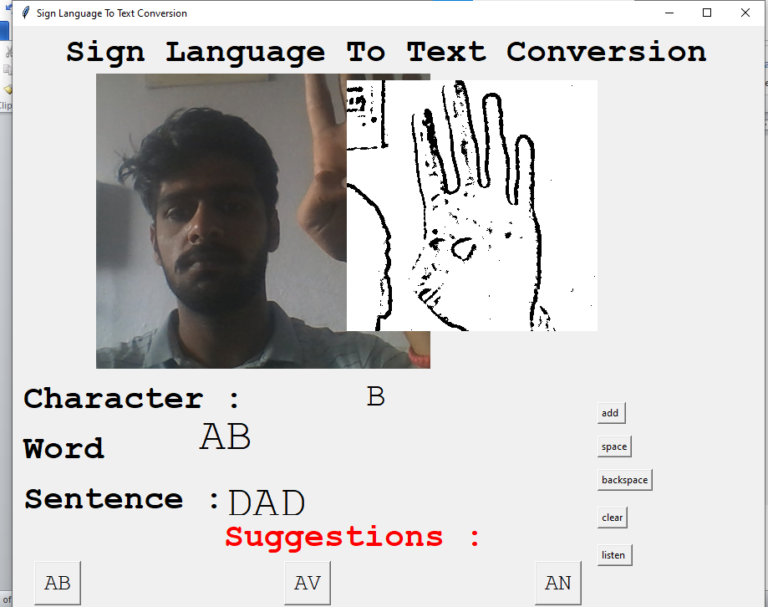

People who cannot speak often find it hard to converse with others and rely on hand signs to communicate. We stored a set of signs together with their meanings and built a model that classifies what each sign means. The model runs in real time and gives good accuracy. We also built a GUI that suggests possible words from the recognized letters and converts the composed text into speech.

Code Description & Execution

Algorithm Description

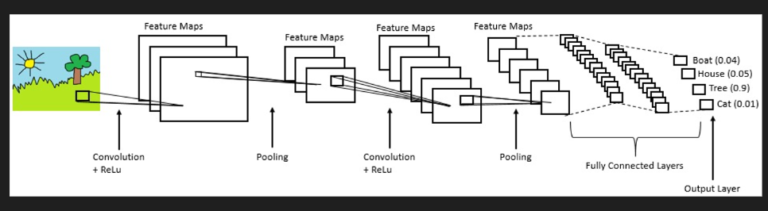

Deep learning and transfer learning can learn efficiently from many kinds of data, so we chose a deep learning model for this project: a convolutional neural network (CNN). A CNN is built around filters. Each convolutional layer applies a set of filters that act as feature detectors: they extract features from the input image and pass them on to later layers for further processing. The number of filters in a convolutional layer can be chosen to suit the data at hand.

Alongside the convolutional layers, the model uses activation functions, max pooling, batch normalization, and dropout layers, followed by a flatten layer and an output layer. Flattening converts the output of the convolutional part into a vector that can be fed to the dense layer, which gives the probability of each predicted class.

Reference:

https://www.ibm.com/cloud/learn/convolutional-neural-networks
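
To make the roles of the layers described above concrete, here is a minimal pure-Python sketch of one forward pass: a filter, an activation, max pooling, flattening, and a dense layer with softmax. It is not the project's model. The 6x6 image, the vertical-edge filter, and the dense weights are hand-picked for illustration (nothing is learned here), and batch normalization and dropout are left out because they mainly matter during training.

```python
import math

def conv2d(image, kernel):
    """Slide one filter over a 2D image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(fmap):
    """Activation function: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Keep the strongest response in each non-overlapping 2x2 block."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]

def flatten(fmap):
    """Turn the 2D feature map into a flat vector for the dense layer."""
    return [v for row in fmap for v in row]

def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output class."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical input: dark left half, bright right half (a vertical edge).
IMAGE = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
# Hypothetical filter that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [[-1, 0, 1] for _ in range(3)]
# Hand-picked (not learned) dense weights for two made-up classes.
WEIGHTS = [[0.5] * 4, [-0.5] * 4]
BIASES = [0.0, 0.0]

def forward(image):
    """Run conv -> ReLU -> max pool -> flatten -> dense -> softmax."""
    features = max_pool2x2(relu(conv2d(image, VERTICAL_EDGE)))
    return softmax(dense(flatten(features), WEIGHTS, BIASES))

if __name__ == "__main__":
    print(conv2d(IMAGE, VERTICAL_EDGE))
    print(forward(IMAGE))
```

In a real project these steps would normally come from a deep learning library such as Keras rather than being written by hand; the sketch only shows what each layer contributes.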

The GUI also has add, backspace, and clear buttons for forming a word or sentence. In addition, it suggests words based on the letters selected so far, which makes it easier to complete a word. This was done with the help of basic NLP tasks.
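
The behaviour of the add, backspace, and clear buttons and the word suggestions can be sketched as follows. This is only an illustration: the text says the suggestions come from "basic NLP tasks" without giving details, so simple prefix matching against a small word list is assumed here, and the class name `SentenceBuilder` and the vocabulary are hypothetical.

```python
class SentenceBuilder:
    """Holds the text typed so far and suggests completions for the last word."""

    def __init__(self, vocabulary):
        # Assumed word list; a real application would load a larger one.
        self.vocabulary = sorted(word.lower() for word in vocabulary)
        self.text = ""

    def add(self, letter):
        """'Add' button: append the recognized letter (or a space)."""
        self.text += letter.lower()

    def backspace(self):
        """'Backspace' button: remove the last character, if any."""
        self.text = self.text[:-1]

    def clear(self):
        """'Clear' button: start over with empty text."""
        self.text = ""

    def suggestions(self, limit=3):
        """Return up to `limit` vocabulary words starting with the current word."""
        prefix = self.text.split(" ")[-1]
        if not prefix:
            return []
        return [w for w in self.vocabulary if w.startswith(prefix)][:limit]


if __name__ == "__main__":
    builder = SentenceBuilder(["hello", "help", "hand", "world"])
    for letter in "he":
        builder.add(letter)
    print(builder.text, builder.suggestions())
```

Each button in the GUI would call one of these methods and then refresh the displayed text and suggestions.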

How to Execute?

Before running the code, you need to install a few prerequisites: an Anaconda environment, Python, and a code editor.

Anaconda: Anaconda is a distribution that bundles many libraries and lets a data engineer create multiple environments and install the required libraries cleanly and easily.

Refer to this link, if you are just starting and want to know how to install anaconda.

If you already have Anaconda and want to see how to create an Anaconda environment, refer to the article on setting up Jupyter Notebook. You can skip it if you already know how to install Anaconda, set up an environment, and install the packages listed in requirements.txt.

1. Install necessary libraries from requirements.txt file provided.

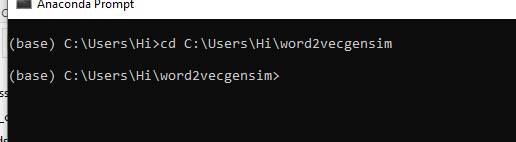

2. Go to the directory where your requirements.txt file is located.

Use cd <path>. For example, if the project is on the D drive:

- D: (switches to the D drive; in the classic Windows command prompt, cd D: alone does not switch drives)

- CD D:\License-Plate-Recognition-main #CHANGE PATH AS PER YOUR PROJECT, THIS IS JUST AN EXAMPLE

If your project is on the C drive, you can skip the first command above and use only the second.

For example: cd C:\Users\Hi\License-Plate-Recognition-main #CHANGE PATH AS PER YOUR PROJECT, THIS IS JUST AN EXAMPLE

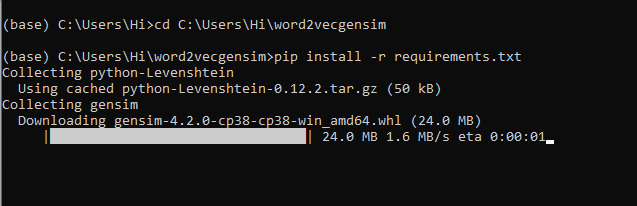

3. Run the command pip install -r requirements.txt or conda install --file requirements.txt

(requirements.txt is a text file listing all the libraries needed to run this project. If installation fails for any library, you may need to install the libraries individually.)
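
If the bulk install fails partway, it helps to know which libraries are still missing before installing them one by one. The following is a minimal sketch that uses only the Python standard library; the function name `missing_packages` is hypothetical, and the check looks only at whether a distribution with that name is installed, not at its version.

```python
import re
from importlib import metadata
from pathlib import Path

def missing_packages(requirement_lines):
    """Return the package names from the given lines that are not installed."""
    missing = []
    for line in requirement_lines:
        # Drop comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as "-r other.txt".
        if not line or line.startswith("-"):
            continue
        # The package name is everything before a version specifier.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match is None:
            continue
        name = match.group(0)
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing

if __name__ == "__main__":
    req_file = Path("requirements.txt")
    if req_file.exists():
        for name in missing_packages(req_file.read_text().splitlines()):
            print("Not installed:", name)
```

Each name it prints can then be installed individually with pip install followed by the package name.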

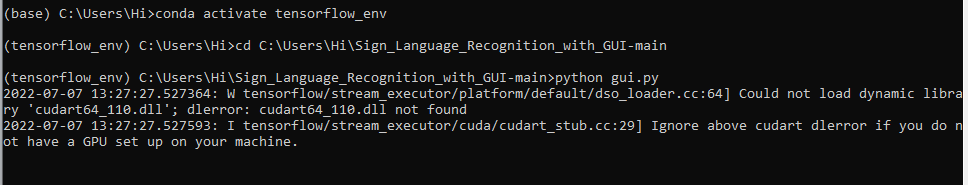

All the necessary libraries will be installed. To run the code, open the Anaconda prompt, activate your virtual environment if you created one (otherwise work from the base environment), start Jupyter Notebook, and open the folder that contains your code.

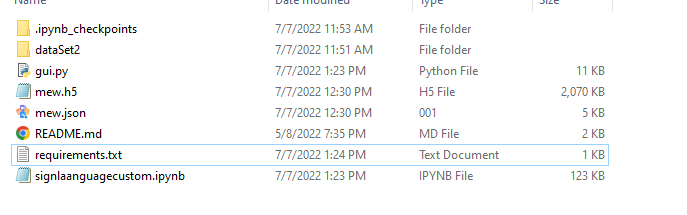

If you want to build your own model for detection, you can go through “signlanguagecustom.ipynb”

Data Description

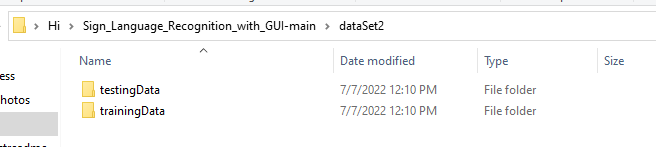

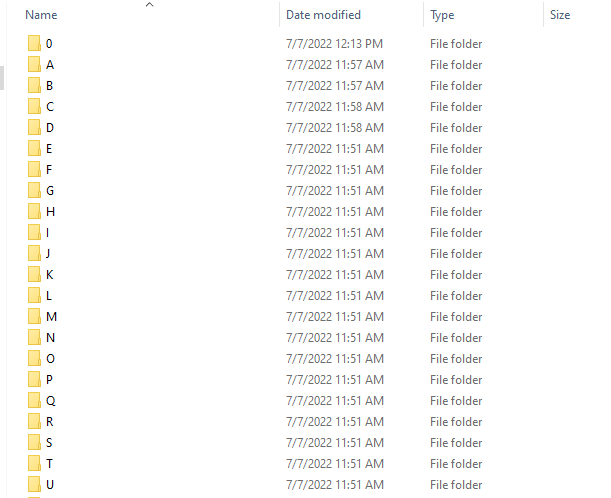

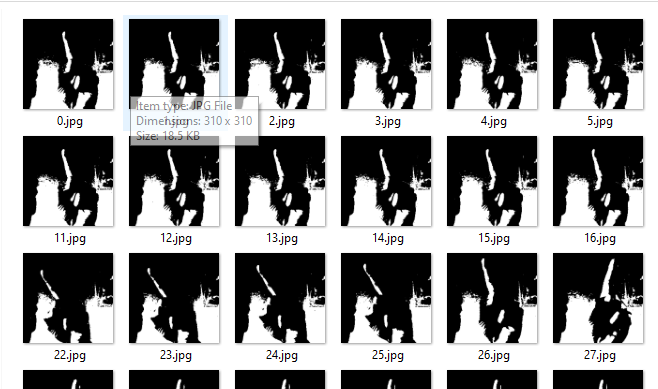

We created our own dataset for the project, and the code for doing so is provided. You are advised to create your own dataset as well: I captured mine in poor lighting conditions, and a dataset captured in good lighting should let the model perform considerably better. The dataset has 2 folders (training and testing), each containing 27 sub-folders (a-z and 0).
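
The folder layout described above can be created with a short script before capturing any images. This is a sketch, not the project's own dataset code: the exact folder names ("training", "testing", lowercase letters, and "0") are taken from the description, while the root directory name `dataset` and the function name are assumptions.

```python
import string
from pathlib import Path

# 26 letter classes plus the "0" class, 27 in total.
CLASS_NAMES = list(string.ascii_lowercase) + ["0"]

def create_dataset_folders(root):
    """Create <root>/training/<class> and <root>/testing/<class> folders."""
    root = Path(root)
    created = []
    for split in ("training", "testing"):
        for name in CLASS_NAMES:
            folder = root / split / name
            # exist_ok=True makes the script safe to run more than once.
            folder.mkdir(parents=True, exist_ok=True)
            created.append(folder)
    return created

if __name__ == "__main__":
    folders = create_dataset_folders("dataset")  # hypothetical root folder
    print(f"Prepared {len(folders)} folders")
```

Captured images for each sign would then be saved into the matching class folder of the training or testing split.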

Sample images from the dataset.

Results

Issues Faced

- Ensure you have all libraries installed.

- Give correct paths wherever necessary.

- Make sure you have the appropriate versions of tensorflow and keras.

- Create the dataset in good lighting conditions, as the model is sensitive to lighting.

- Test the model in a well-lit environment as well.
