Abstract

Resume analysis has been around for decades now, as a well-balanced and aesthetically pleasing resume can make or break your career. A personalized resume analyser helps us evaluate and maintain a proper resume, which might help us get a job at top MNCs. In this resume parser project, we explain the tools that helped us analyse our resume and provide better feedback. This feedback helps us build the skills we are lacking and change the resume pattern, which keeps us one step ahead of others.

Algorithm Description

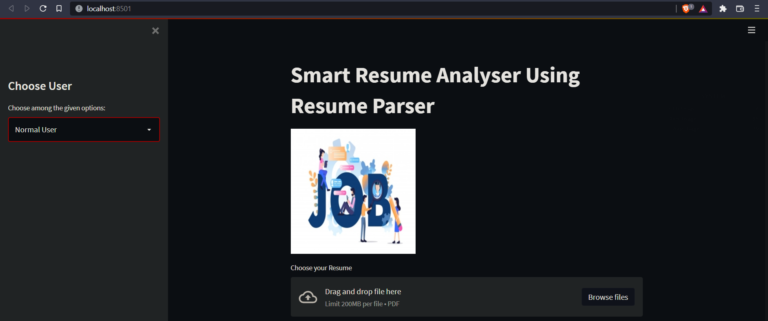

Streamlit: Streamlit is a small, easy-to-use Python web framework that helps us build attractive web apps. The main reason for using Streamlit is that it offers a very user-friendly experience and requires no prior knowledge of HTML, CSS or JavaScript. It is mostly used for deploying machine learning and deep learning models without any external cloud integration, and it can also serve as a front end for ordinary Python code. The output can be viewed in your web browser through a local server.
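To give a feel for how little code a Streamlit page needs, here is a minimal sketch. It is not the project's main.py: the file name app.py, the word_count helper and the page text are assumptions made only for illustration.

```python
# app.py: a minimal Streamlit front end (illustrative sketch, not the project's main.py)
try:
    import streamlit as st
except ImportError:  # lets the helper below be imported even without Streamlit
    st = None


def word_count(text: str) -> int:
    """Count whitespace-separated words in the pasted resume text (hypothetical helper)."""
    return len(text.split())


def main() -> None:
    # Each st.* call below renders one widget on the page.
    st.title("Resume Analyser (demo)")
    resume_text = st.text_area("Paste your resume text here")
    if resume_text:
        st.write(f"Your resume contains {word_count(resume_text)} words.")


if st is not None and __name__ == "__main__":
    main()
```

Assuming Streamlit is installed, saving this as app.py and typing streamlit run app.py should open the page on a local server in your browser.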

Resume Parser: A resume parser is an NLP model that extracts information, such as the candidate's details, from resumes; the model has to be trained on a suitable dataset. Resume parsers are programs designed to scan a document, analyse it and extract the information that is important to recruiters, which helps recruiters efficiently manage resumes submitted electronically. They are also extremely low-cost, and the extracted data can then be searched, matched and displayed by recruiters.
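As a rough illustration of what "extracting information" means, the sketch below pulls an email address, a phone number and a few skills out of plain resume text. It uses simple regular expressions and keyword matching rather than a trained NLP model, so treat it as a simplified stand-in; the KNOWN_SKILLS list and the parse_resume name are assumptions, not part of the project.

```python
import re

# Simple patterns; real resumes will need more robust handling.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d -]{8,}\d")

# Hypothetical skill vocabulary, for illustration only.
KNOWN_SKILLS = {"python", "sql", "streamlit", "machine learning"}


def parse_resume(text: str) -> dict:
    """Return the first email, first phone number and known skills found in the text."""
    lowered = text.lower()
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    # Plain substring matching: "sql" would also match inside "mysql".
    skills = sorted(skill for skill in KNOWN_SKILLS if skill in lowered)
    return {
        "email": email.group() if email else None,
        "phone": phone.group() if phone else None,
        "skills": skills,
    }


sample = (
    "Jane Doe\n"
    "Email: jane.doe@example.com\n"
    "Phone: +91 98765 43210\n"
    "Skills: Python, SQL, Streamlit"
)
print(parse_resume(sample))
```

A trained model can go further than this, for example by recognising names or job titles that do not follow a fixed pattern.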

Installing SQLITE Database

Ok, so as this resume parser project is integrated with database login support, we need to install the SQLite database on our system to get the code running. Trust me, this will not take more than 5 minutes of your precious time, so hang on.

- Visit the given link and download the SQLite standard installer.

- After the download has finished, click on the .msi file and follow all the necessary installation steps.

- Click on Next and make sure to check the boxes for creating the shortcuts.

- Click on Next and the installation begins.

- At last, click on Finish to complete the setup.
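To show how login support can sit on top of SQLite, here is a small sketch using Python's built-in sqlite3 module. The table layout, the function names and the in-memory database are assumptions for illustration; the project's own schema may differ.

```python
import hashlib
import sqlite3


def hash_password(password: str) -> str:
    """Hash a password with SHA-256 (fine for a demo, too weak for production)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()


def create_users_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        "username TEXT PRIMARY KEY, password_hash TEXT NOT NULL)"
    )
    conn.commit()


def add_user(conn: sqlite3.Connection, username: str, password: str) -> None:
    # Parameterised query: values are passed separately from the SQL text.
    conn.execute(
        "INSERT INTO users (username, password_hash) VALUES (?, ?)",
        (username, hash_password(password)),
    )
    conn.commit()


def check_login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    row = conn.execute(
        "SELECT password_hash FROM users WHERE username = ?", (username,)
    ).fetchone()
    return row is not None and row[0] == hash_password(password)


# ":memory:" keeps the demo database in RAM; pass a file name to persist it.
conn = sqlite3.connect(":memory:")
create_users_table(conn)
add_user(conn, "demo_user", "s3cret")
print(check_login(conn, "demo_user", "s3cret"))  # True
print(check_login(conn, "demo_user", "wrong"))   # False
```

For a real application, a dedicated password hashing scheme with a salt would be a safer choice than plain SHA-256.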

How to Execute?

Make sure you have checked the "Add to PATH" tick boxes while installing Python and Anaconda.

Refer to this link if you are just starting and want to know how to install Anaconda.

If you already have Anaconda and want to know how to create an Anaconda environment, refer to this article on setting up Jupyter Notebook. You can skip the article if you already know how to install Anaconda, set up an environment and install from requirements.txt.

- Install the prerequisites/software required to execute the code by following the blog linked above.

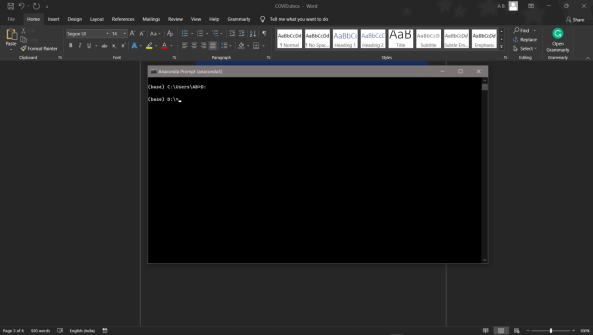

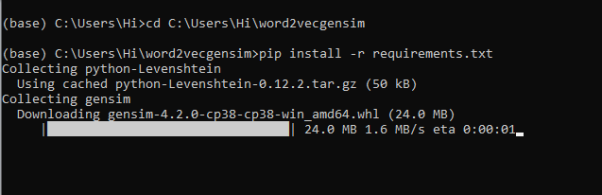

- Press the Windows key and type anaconda prompt; a terminal opens up.

- Before executing the code, we need to create a dedicated environment in which to install the libraries required for our project.

- Type conda create --name "env_name", e.g.: conda create --name project_1

- Type conda activate "env_name", e.g.: conda activate project_1

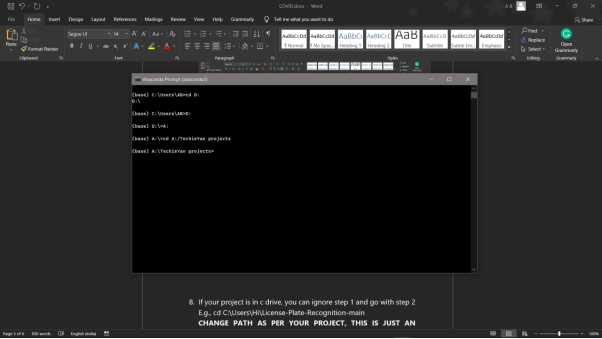

- Go to the directory where your requirements.txt file is present by typing cd followed by the path to that directory.

- E.g., if the project is on the D drive, first switch drives by typing d: and then type cd d:\License-Plate-Recognition-main (CHANGE PATH AS PER YOUR PROJECT, THIS IS JUST AN EXAMPLE).

- If your project is on the C drive, you can skip the drive switch and go straight to the cd command, e.g., cd C:\Users\Hi\License-Plate-Recognition-main (CHANGE PATH AS PER YOUR PROJECT, THIS IS JUST AN EXAMPLE).

- Run pip install -r requirements.txt or conda install --file requirements.txt (requirements.txt is a text file listing all the libraries required to run this Python project. If installing gives an error, you might need to install the failing libraries individually.)

- To run a .py file, make sure you are in the Anaconda terminal and in the folder where the file is saved. Before running, open main.py and make sure to change the path of the dataset. Then type python main.py in the terminal.

- If you would like to run an .ipynb file, please follow the link to set up and open Jupyter Notebook. You will be redirected to the local server, where you can select whichever .ipynb file you'd like to run, click on it and execute each cell one by one by pressing Shift+Enter.

Please follow the above links on how to install and set up anaconda environment to execute files.

Note: There are 4 different files, each serving a different purpose:

- Preprocess.ipynb consists of all the data cleaning steps, which are necessary to build a clean and efficient model.

- main.ipynb consists of the major steps and exploratory data analysis, which allow us to understand more about the data and its behaviour.

- Variable_Selction.ipynb consists of dimensionality reduction techniques, such as the sequential feature selector method, to reduce the dimensions of the data and compare the model scores before and after dimensionality reduction.

- Combined_main_var.ipynb combines main.ipynb and variable_selection.ipynb to make them clearer and more understandable for the audience.

Please execute the files in the above sequence. Note that the files need a system with good specifications to run.

Make sure to change the path of the dataset in the code.

Data Description

No external dataset was used for this project. Some external links are included in the code to build a recommendation system, which recommends courses to the people who use the application based on their resume score.
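One way such a score-based recommendation could work is sketched below. The section names, the score thresholds and the course titles are all placeholders invented for illustration; the project's own scoring rules and course links are not reproduced here.

```python
# Hypothetical sections a complete resume is expected to contain.
EXPECTED_SECTIONS = ["objective", "education", "skills", "projects", "experience"]

# Placeholder course titles; a real app would map these to actual course links.
COURSES_BY_LEVEL = {
    "beginner": ["Placeholder course: resume writing basics"],
    "intermediate": ["Placeholder course: building a project portfolio"],
    "advanced": ["Placeholder course: interview preparation"],
}


def resume_score(text: str) -> int:
    """Score from 0 to 100: the share of expected sections mentioned in the text."""
    lowered = text.lower()
    found = sum(1 for section in EXPECTED_SECTIONS if section in lowered)
    return round(100 * found / len(EXPECTED_SECTIONS))


def recommend_courses(score: int) -> list:
    """Map a resume score to a list of courses (thresholds are assumptions)."""
    if score < 40:
        level = "beginner"
    elif score < 80:
        level = "intermediate"
    else:
        level = "advanced"
    return COURSES_BY_LEVEL[level]


resume_text = "Education: B.Tech\nSkills: Python\nProjects: Resume parser"
score = resume_score(resume_text)
print(score, recommend_courses(score))
```

Keeping the scoring and the recommendation in separate functions makes it easy to swap in a different scoring rule later without touching the course mapping.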

Final Results

Issues you may face while executing the code

- You might face an issue while installing specific libraries; in this case, you might need to install the libraries manually. Example: pip install module_name, i.e., pip install pandas

- Make sure you have the latest version of Python, or the specific version the project requires, since a version mismatch can cause errors.

- You may need to add Python and Anaconda to the PATH environment variable in order to run Python files and the Anaconda environment from a code editor.

- Make sure to change the paths in the code accordingly where your dataset/model is saved.
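To narrow down the first two issues, a small helper script like the sketch below can print your Python version and list which packages from requirements.txt are not installed yet. The function names and the simplified requirement parsing are assumptions; the script only handles plain lines such as pandas==1.5.0.

```python
import re
import sys
from importlib import metadata


def parse_requirements(text: str) -> list:
    """Extract package names from requirements text, ignoring comments and options."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        # Cut the name off at the first version specifier, extra or marker.
        names.append(re.split(r"[<>=!~\[; ]", line, maxsplit=1)[0])
    return names


def missing_packages(names: list) -> list:
    """Return the names that are not installed in the current environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    try:
        with open("requirements.txt", encoding="utf-8") as handle:
            required = parse_requirements(handle.read())
    except FileNotFoundError:
        print("requirements.txt not found in the current directory")
    else:
        for name in missing_packages(required):
            print(f"Missing: {name} (try: pip install {name})")
```

Run it from the activated environment, in the folder that contains requirements.txt, so that it checks the same packages your project will use.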

Refer to the link below for more details on installing and configuring Python and Anaconda.

https://techieyantechnologies.com/2022/07/how-to-install-anaconda/

Note:

All the required data has been provided over here. Please feel free to contact me for model weights and if you face any issues.

Click Here For The Source Code And Associated Files.

https://www.linkedin.com/in/abhinay-lingala-5a3ab7205/

Yes, you now have more knowledge than yesterday. Keep going!