Space Invaders Game

Abstract

Space Invaders can be exciting for gamers and non-gamers alike, offering a space experience that ranges from spaceships to views of outer space. Video games are an integral part of popular culture, and the video game industry faces new challenges as the number of players, including disabled players, and the range of application areas, including serious games, continue to grow. Space Invaders is a classic Atari game that many of us played in childhood, and it remains popular with youngsters and adults. In this article we automate the game using a well-known reinforcement learning algorithm, Deep Q-learning. The game itself can be created with the Pygame library in relatively few lines of code, with a game class that performs the core functions. The algorithms used in this type of project help in building accurate and efficient programs. The game plays like others of its kind, except that you build it yourself, and its whole structure can be changed to suit your personal preferences.

Programming Methodology

The Python Pygame library makes the game easier to design and build.

The process for accomplishing the above task is:

- We define our Deep Q-learning neural network. This is a CNN that takes in game-screen images and outputs a Q-value, an estimate of the expected return, for each of the actions in the Space Invaders game space. Because Q-values are unbounded estimates rather than probabilities, we do not include any activation function in our final layer.

- As Q-learning requires us to have knowledge of both the current and next states, we need to start with data generation. We feed preprocessed input images of the game space, representing initial states s, into the network, and acquire the initial Q-values for each action. Before training, these values will appear random and sub-optimal. Note that our preprocessing now includes stacking and composition as well.

- With our tensor of Q-values, we then find the action with the current highest value using the argmax() function, and use it to build an epsilon-greedy policy.

- Using our policy, we’ll then select the action a, and evaluate our decision in the gym environment to receive information on the new state s’, the reward r, and whether the episode has finished.

- We store this combination of information in a buffer in the list form <s,a,r,s’,d>, and repeat steps 2–4 for a preset number of times to build up a large enough buffer dataset.

- Once step 5 has finished, we move to generate our target y-values, R’ and A’, that are required for the loss calculation. While the former is simply discounted from R, we obtain the A’ by feeding S’ into our network. With all of our components in place, we can then calculate the loss to train our network.

- Once training has finished, we’ll evaluate the performance of our agent graphically and through a demonstration.
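The action-selection, buffering, and target steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the notebook's actual code: the names `epsilon_greedy`, `ReplayBuffer`, and `td_target`, the discount factor of 0.99, and the use of plain lists in place of network outputs are all assumptions made for the example. The target shown is the standard one-step Q-learning target, which may differ in detail from how the notebook computes R’ and A’.

```python
import random
from collections import deque

GAMMA = 0.99  # discount factor (assumed value, not taken from the article)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the argmax action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


class ReplayBuffer:
    """Fixed-size store of (s, a, r, s_next, done) transitions."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest transition once full.
        self.buffer = deque(maxlen=capacity)

    def add(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size, rng=random):
        # Uniform random minibatch, without replacement.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_target(reward, next_q_values, done, gamma=GAMMA):
    """Target y = r + gamma * max_a' Q(s', a'); no bootstrapping at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)
```

In a real training loop, `q_values` and `next_q_values` would come from the CNN applied to s and s’, and the loss would compare each target with the network's Q-value for the action that was actually taken.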

Code Description & Execution

How to Execute?

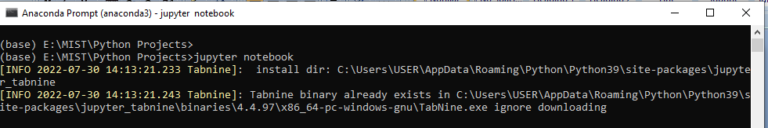

Step 1 :

Open Jupyter Notebook in the project directory from the Anaconda Prompt shell.

In my case

To perform the above step, you need to know how to install Jupyter Notebook and run the Anaconda shell. If you don’t know how, check our previous articles about it.

Step 2 :

Open the .ipynb file from the folder.


Step 3:

Install the required libraries using the command pip install (library_name), for example pip install tensorflow. The required libraries are listed in the code; please refer to it.
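Before running the notebook, it can help to check which libraries are still missing. The snippet below is a small sketch of one way to do that from a notebook cell. The helper name `missing_packages` is hypothetical, and the package list is an assumption based on the libraries mentioned in this article, so check the notebook's own import statements for the real list.

```python
import importlib.util

# Assumed list, based on libraries named in this article; the notebook's
# import statements are the authoritative source. Note that an import name
# can differ from the name used with pip install.
REQUIRED = ["tensorflow", "gym", "pygame"]


def missing_packages(names):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    for name in missing_packages(REQUIRED):
        print(f"Missing: {name} (try: pip install {name})")
```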

Step 4:

Now run each cell individually, using the Run command or the shortcut key Shift+Enter. This part is crucial: wait for each cell to finish executing before running the next one.

Output

Issues you may face

- Giving the right path in the Anaconda Prompt shell so that Jupyter Notebook opens in the correct directory, or opening the correct .ipynb file.

Note:

All the required data has been provided over here. Please feel free to contact me for model weights and if you face any issues.

https://techieyantechnologies.com/contact/

Yes, you now have more knowledge than yesterday. Keep going.
